We recently came across an interesting comment on a blog post at AllFacebook.com. The post was about a study indicating that Facebook can increase the risk of eating disorders, and the comment was from Dee Christoff, Vice President of the National Eating Disorders Association (NEDA), who said that NEDA and Facebook are working together to "make the site safer for those who may be vulnerable to eating disorders or self harm."

One particular part of her comment caught our eye. She said, "We are working on the language and processes for warning, reporting and/or removing photos, status, posts and comments that indicate imminent threat or harm – or that are inappropriate and could serve as triggers for eating disorders. Facebook is also developing an FAQ with us about EDs and referring individuals to resources for themselves or someone they may be concerned about."

Curious about how such a mechanism would work without violating user privacy, we reached out to both Christoff and Facebook to find out more. Facebook spokesperson Malorie Lucich gave us the following statement on the matter: "We don’t have anything specific to share, but we work with a variety of Internet safety groups in conjunction with our Safety Advisory Board to constantly refine and improve our reporting infrastructure and FAQs to provide a safe environment for all our users."

Christoff had a little more in the way of explanation, though details on the project have yet to be worked out. Basically, it sounds like this will just be integrated with Facebook’s existing content reporting capabilities. She tells WebProNews, "Facebook has asked us to help them with their reporting flows that allow people to report unsafe, offensive or objectionable behaviors. Depending on the severity or reason, the reporting process can lead to Facebook removing photos, statuses, comments, wall postings or groups; warnings to the person or groups who posted them; and/or removal from the site. It also allows FB to provide an FAQ about eating disorders and lead people to helpful resources. We’re assisting them with their procedures and language for self harm related to eating disorders."

As they’re still in the process of figuring everything out, Christoff says, "I can’t say exactly what the reporting mechanisms will be."

"Facebook has a reporting process," she explains. "So far, I’ve looked at examples that Facebook provides regarding suicide prevention and warning groups because photos or content has been removed."

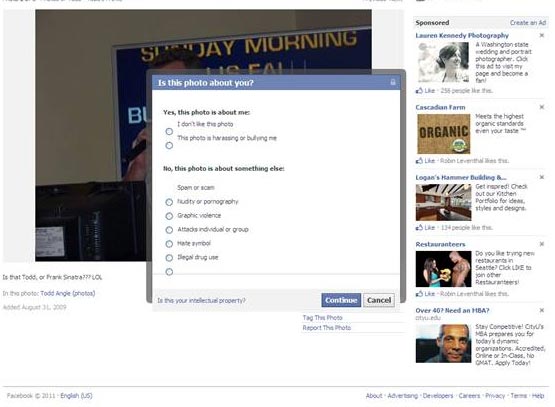

You can already look at any photo on Facebook, and find a link to report that photo. Options that are currently available include things like: "I don’t like this photo," "This photo is harassing or bullying me," "nudity or pornography," "graphic violence," "attacks individual or group," "hate symbol," and "illegal drug use."

The wording of any new reporting options is part of what is still being determined.

Now, just because someone reports a photo doesn’t necessarily mean that Facebook will take action. As Christoff notes, "FB researches it and makes a determination of whether it should be removed and/or whether the person posting such material should be warned or provided with further information and/or resources."

Christoff says that Facebook is concerned about self-harm and wants help from groups like NEDA in identifying the language, and possibly images, that raise flags, in refining its reporting flow and FAQs on the subject, and in linking people with resources so that they can find help.

It remains to be seen what kind of language and photos might raise such flags, but you can see Facebook’s policies on various other content removal areas here.

WebProNews is an iEntry Publication
